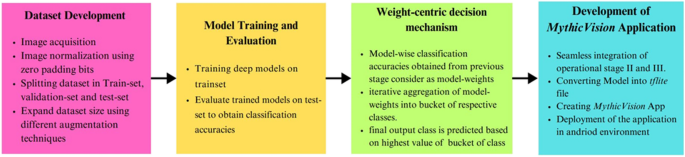

This section presents a succinct description of the step-wise development of the framework, which comprises four steps: (i) The framework commences with the acquisition, selection, and curation of images of Indian deities to create the dataset, which is then partitioned into training, validation, and test sets. (ii) Next, four state-of-the-art deep models, viz. MobileNet, ResNet, EfficientNet, and GoogleNet, are trained on the training set and evaluated on the test set to obtain the classification accuracy of each model. (iii) The classification accuracies obtained from the different models serve as the foundation for a novel weight-centric decision mechanism, which prioritizes each model according to its individual accuracy, aiming to improve the overall accuracy and reliability of the framework. (iv) Finally, the operational modules of (ii) and (iii) are integrated into the MythicVision mobile application, which puts concise descriptions of Indian deities, obtained from real-time input images, at the user’s fingertips. The step-wise development of the framework is depicted in Fig. 1. Some salient features of the designed application are also highlighted in this section.

A visual representation of the stages of the developed application framework. It starts with the creation of the dataset, followed by the training and evaluation of deep models on the newly developed dataset. Next, a weight-centric decision mechanism is implemented to bolster the classification accuracy. Finally, the mobile application is developed, enabling users to obtain detailed descriptions of Indian deities from real-time images.

Dataset preparation

The preparation of the dataset played a crucial role in the reported work, given the limited availability of existing datasets on Indian mythology. This section provides an in-depth overview of the dataset creation process, including the data collection, labeling, and preprocessing steps undertaken to ensure high-quality, representative data for training and evaluation.

Dataset acquisition and image normalization

For a set of ten distinct deities, 3000 images (300 per class) were manually collected from various sources, viz. scene images (book covers, posters, idols) and web sources36,37. Care was taken to ensure that the collected images are scene images capturing a range of unique contexts and that they are free from duplicates. An overview of the number of images collected from each source is provided in Table 2. This approach enhances the dataset’s diversity and supports more robust model training. Because the collected images have different resolutions, they were all normalized to 224 × 224 pixels so that they can be fed to the models; zero padding was used during this normalization to preserve each image’s original aspect ratio. The dataset was then split into training, validation, and test sets in a 70:20:10 ratio.
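The normalization and split described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors’ code: an image is represented as a 2-D list of pixel values, the longer side is scaled to 224 with nearest-neighbour sampling and the result is centred on a zero canvas, and the 70:20:10 split is performed per class (stratified) with a fixed seed. The helper names `letterbox_geometry`, `letterbox`, and `stratified_split` are hypothetical.

```python
import random

TARGET = 224  # side length used in the text


def letterbox_geometry(width, height, target=TARGET):
    """Return (new_w, new_h, pad_left, pad_top) for an aspect-preserving resize.

    The longer side is scaled to `target`, the shorter side by the same
    factor; the remaining area is left as zero padding (assumed centred).
    """
    scale = target / max(width, height)
    new_w = max(1, round(width * scale))
    new_h = max(1, round(height * scale))
    return new_w, new_h, (target - new_w) // 2, (target - new_h) // 2


def letterbox(image, target=TARGET):
    """Nearest-neighbour resize of a 2-D grid onto a zero-filled square canvas."""
    h, w = len(image), len(image[0])
    new_w, new_h, left, top = letterbox_geometry(w, h, target)
    canvas = [[0] * target for _ in range(target)]
    for y in range(new_h):
        src_y = min(h - 1, int(y * h / new_h))
        for x in range(new_w):
            src_x = min(w - 1, int(x * w / new_w))
            canvas[top + y][left + x] = image[src_y][src_x]
    return canvas


def stratified_split(paths_by_class, ratios=(0.7, 0.2, 0.1), seed=0):
    """Split every class separately so each subset keeps the class balance."""
    rng = random.Random(seed)
    train, val, test = [], [], []
    for label, paths in paths_by_class.items():
        paths = list(paths)
        rng.shuffle(paths)
        n_train = round(len(paths) * ratios[0])
        n_val = round(len(paths) * ratios[1])
        train += [(p, label) for p in paths[:n_train]]
        val += [(p, label) for p in paths[n_train:n_train + n_val]]
        test += [(p, label) for p in paths[n_train + n_val:]]  # remainder
    return train, val, test
```

Splitting each class separately keeps the ten classes balanced across the three subsets; with 300 images per class, this sketch yields 210, 60, and 30 images per class.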

Dataset augmentation

A set of augmentation techniques was applied to the training images to increase the amount of training data, reducing overfitting and helping the model generalize better to unseen data. These augmentation steps include:

- Rotation: Rotates images at different angles, helping the model recognize objects at various orientations.
- Shearing: Skews the image along one axis, making the model better at detecting objects that might appear stretched or tilted in certain perspectives.
- Translation: Moves the image slightly in different directions (up, down, left, or right), training the model to recognize objects even if they are not perfectly centered.
- Flipping: Mirrors the image horizontally or vertically, helping the model generalize to mirrored versions of objects, which is useful for detecting symmetrically structured features.

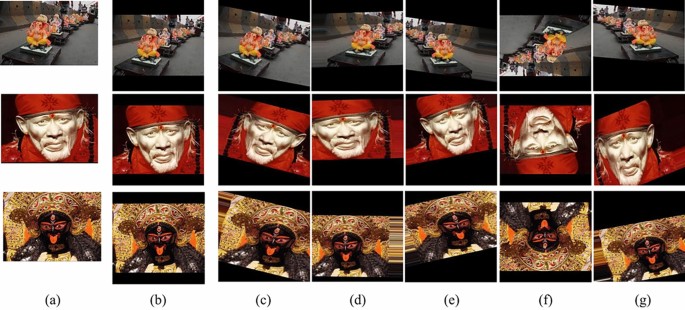

Within the dataset, 800 images were assigned for validation and 200 images for testing, while the remaining 2000 images (200 per class) underwent augmentation. Each of these images was transformed by a 20-degree rotation, a 180-degree rotation, flipping, shearing, and translation, generating 5 new images per original, as shown in Fig. 2. Consequently, around 10,000 images were allocated for training in the subsequent image classification task, as shown in Table 3. Thus, the in-house dataset encompasses a total of 10,970 images.

Development of the dataset and extension of its size using different augmentation techniques. (a) Original source images, (b) corresponding normalized images of 224 × 224 pixels obtained with zero padding, and (c–g) corresponding replicated images obtained by applying 20-degree rotation, shearing, translation, 180-degree rotation, and flipping, respectively.
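The five augmentations of Fig. 2 can all be expressed as affine warps. The sketch below is illustrative only and is not the authors’ pipeline: it again treats an image as a 2-D list, uses inverse mapping with nearest-neighbour sampling about the image centre, and fills uncovered pixels with zeros. The shear factor (0.2), the translation (10 pixels to the right), and the use of a horizontal flip are assumptions, since the text does not specify these parameters; all function names are hypothetical.

```python
import math


def warp(image, inv):
    """Affine warp of a 2-D grid using the inverse 2x3 matrix `inv` (output -> input).

    Coordinates are taken relative to the image centre. Output pixels whose
    source falls outside the image are set to 0, like the zero padding.
    """
    h, w = len(image), len(image[0])
    cx, cy = (w - 1) / 2, (h - 1) / 2
    (a, b, tx), (c, d, ty) = inv
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx, dy = x - cx, y - cy
            sx = round(a * dx + b * dy + tx + cx)  # nearest source column
            sy = round(c * dx + d * dy + ty + cy)  # nearest source row
            if 0 <= sx < w and 0 <= sy < h:
                out[y][x] = image[sy][sx]
    return out


def rotate(image, degrees):
    """Rotate about the centre; the inverse of a rotation by t is a rotation by -t."""
    t = math.radians(degrees)
    return warp(image, ((math.cos(t), math.sin(t), 0), (-math.sin(t), math.cos(t), 0)))


def shear(image, k):
    """Horizontal shear x' = x + k*y, inverted as x = x' - k*y'."""
    return warp(image, ((1, -k, 0), (0, 1, 0)))


def translate(image, shift_x, shift_y):
    """Shift the content by (shift_x, shift_y); vacated pixels become 0."""
    return warp(image, ((1, 0, -shift_x), (0, 1, -shift_y)))


def flip_horizontal(image):
    """Mirror each row left to right."""
    return [row[::-1] for row in image]


def augment(image, shear_k=0.2, shift=(10, 0)):
    """Five variants in the order of Fig. 2 (c-g); parameter values are assumptions."""
    return [
        rotate(image, 20),
        shear(image, shear_k),
        translate(image, *shift),
        rotate(image, 180),
        flip_horizontal(image),
    ]
```

Inverse mapping (asking, for every output pixel, which input pixel lands there) avoids the holes that forward mapping would leave after a 20-degree rotation.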

Complexity of the dataset

The self-curated dataset presents numerous challenges in its preparation, including significant variability in features, posture, and environmental factors, which require extensive preprocessing and careful dataset curation. These challenges include:

-

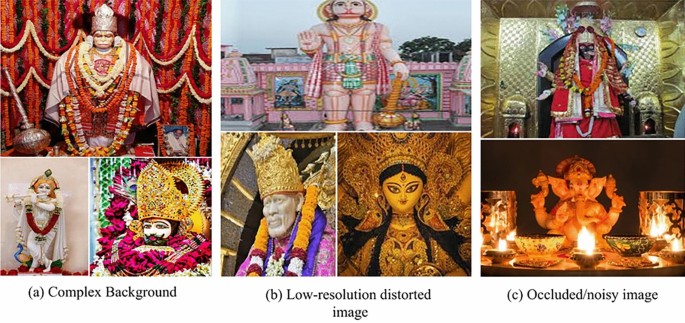

Complex background Some scene deity images often feature intricate, high-contrast backgrounds as seen in Fig. 3a that can negatively impact the framework’s performance, highlighting the need for efficient solutions to address this challenge.

-

Low-resolution and distorted images Images may be blurred or distorted, especially in cases where an oil lamp is placed in front of the idol, leading to reduced image clarity and potential loss of detail as seen in Fig. 3b.

-

Occluded/noisy images The presence of garlands and accessories on idols, objects hiding deities, and unnecessary background capture can introduce unnecessary noise as seen in Fig. 3c, potentially affecting the framework’s ability to accurately recognize and classify the deity.

Some sample images demonstrate the complexity and diversity of the dataset. (a) Images with complex background, (b) low-resolution and distorted images, (c) occluded/noisy images.

These complexities have been carefully considered and addressed during the dataset preparation and preprocessing stages to ensure optimal model performance.

Employed deep models

This section provides a detailed insight into the employed deep learning models, viz. MobileNet, ResNet, EfficientNet, and GoogleNet, with their layer-wise architectures and working principles.

Strategy behind model selection

Selecting the right models is crucial for addressing the unique challenges of classifying Indian deities. Each of the following models was chosen for its specific strengths in image classification, balancing accuracy, efficiency, and adaptability to various devices. A brief outline of the employed models and the scenarios in which each is favourable is given in Table 4.

Each of these models is explained in detail in the following sections, providing a comprehensive understanding of its contribution to the classification of Indian deities.

Architecture and working principle of employed deep models

MobileNetV2

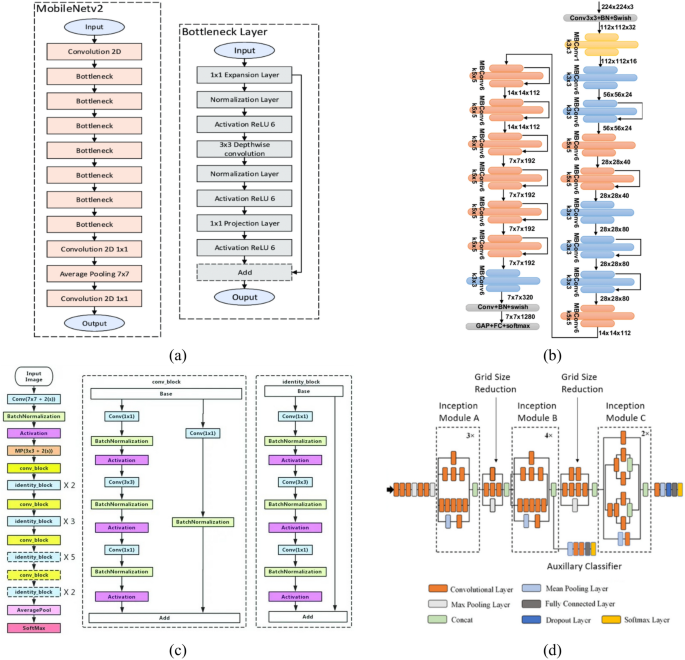

MobileNetV238,39 is designed to be lightweight and efficient, making it suitable for real-time applications on resource-constrained devices. The architecture comprises Conv2D, bottleneck, and average-pooling layers. Each bottleneck block consists of a 1 × 1 expansion convolution, a 3 × 3 depth-wise convolution, and a 1 × 1 projection convolution, each followed by batch normalization, with ReLU6 activations after the expansion and depth-wise convolutions (the projection is linear). This design keeps the representation passed between blocks compact while performing spatial filtering with inexpensive depth-wise convolutions, which reduces computation. Figure 4a40 illustrates the layer-wise architecture of MobileNetV2.

Layer-wise architecture of employed deep models. (a) MobileNetv2, (b) EfficientNetB0, (c) ResNet-50, and (d) Inceptionv3 respectively.
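To make the computational argument for the MobileNetV2 bottleneck concrete, the sketch below counts multiply-accumulate operations (MACs) for stride-1 convolutions, ignoring biases and batch normalization. The feature-map size (56 × 56), the channel counts, and the expansion factor t = 6 are example values chosen for illustration, and the helper names are hypothetical.

```python
def macs_standard(h, w, c_in, c_out, k=3):
    """Standard k x k convolution: every output channel sees every input channel."""
    return h * w * k * k * c_in * c_out


def macs_depthwise_separable(h, w, c_in, c_out, k=3):
    """k x k depth-wise convolution (one filter per channel) + 1 x 1 point-wise convolution."""
    return h * w * k * k * c_in + h * w * c_in * c_out


def macs_inverted_residual(h, w, c_in, c_out, t=6, k=3):
    """Bottleneck block: 1x1 expansion to t*c_in channels, k x k depth-wise
    convolution, then a linear 1x1 projection to c_out channels."""
    hidden = t * c_in
    expand = h * w * c_in * hidden
    depthwise = h * w * k * k * hidden
    project = h * w * hidden * c_out
    return expand + depthwise + project


# Example values (assumed): 56 x 56 map, 24 channels, expansion t = 6 -> 144 channels.
H = W = 56
standard = macs_standard(H, W, 144, 144)              # full 3x3 conv at the expanded width
separable = macs_depthwise_separable(H, W, 144, 144)  # what the bottleneck uses instead
block = macs_inverted_residual(H, W, 24, 24)          # whole 24 -> 144 -> 24 block
print(f"standard 3x3 at 144 channels: {standard:,} MACs")
print(f"depth-wise separable at 144 channels: {separable:,} MACs ({separable / standard:.1%})")
print(f"24 -> 144 -> 24 bottleneck block: {block:,} MACs")
```

With these numbers the depth-wise separable pair needs about 12% of the MACs of a standard 3 × 3 convolution at the same width (the ratio is 1/C_out + 1/9), which is where the savings of the bottleneck design come from.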

EfficientNetB0

The main architecture of EfficientNetB041 consists of stacked blocks, each built from MobileNetV2-like inverted residual structures (MBConv), as seen in Fig. 4b42. The EfficientNet family uses a compound scaling method that scales the network’s depth, width, and input resolution together, using constant factors (α, β, γ) raised to a common compound coefficient, so that the model can be adapted to different computational budgets; EfficientNetB0 is the baseline network from which the larger variants are derived. Balancing accuracy and computational cost, EfficientNetB0 is a versatile choice for mobile devices and other resource-constrained environments.
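The compound scaling rule can be written as depth \(d = \alpha^\phi\), width \(w = \beta^\phi\), and resolution \(r = \gamma^\phi\), with \(\alpha \cdot \beta^2 \cdot \gamma^2 \approx 2\) so that FLOPs grow roughly as \(2^\phi\). The sketch below uses α = 1.2, β = 1.1, γ = 1.15, the values reported in the original EfficientNet paper. Treat it as an idealized illustration: the published B1 to B7 models round these factors (for example, B1 uses a 240-pixel input rather than 224 × 1.15). The function name is hypothetical.

```python
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15  # depth, width, and resolution bases


def compound_scale(phi, base_resolution=224):
    """Idealized EfficientNet multipliers for compound coefficient phi (B0 is phi = 0)."""
    depth = ALPHA ** phi                              # multiplier on the number of layers
    width = BETA ** phi                               # multiplier on the number of channels
    resolution = base_resolution * GAMMA ** phi       # input side length in pixels
    flops = (ALPHA * BETA ** 2 * GAMMA ** 2) ** phi   # approximate FLOPs multiplier
    return depth, width, resolution, flops


for phi in range(4):
    d, w, r, f = compound_scale(phi)
    print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, input ~{r:.0f}px, FLOPs x{f:.2f}")
```

Width and resolution enter the FLOPs count squared because doubling either one quadruples the work of a convolution, which is why the constraint uses \(\beta^2\) and \(\gamma^2\).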

ResNet-50

ResNet-5043 is a deep neural network architecture comprising 48 convolutional layers, one average pooling layer, and one fully connected layer. It is built from 3-layer bottleneck blocks with residual (skip) connections, which add each block’s input to its output and thereby ease the training of very deep networks. The ResNet-50 architecture is depicted in Fig. 4c44. ResNet-50 is computationally intensive, requiring approximately 3.8 billion floating-point operations (FLOPs) per 224 × 224 image. This capacity contributes to its strong performance in various computer vision tasks, particularly image classification.
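The layer count and feature-map sizes of ResNet-50 follow from its stage configuration of 3, 4, 6, and 3 bottleneck blocks, as given in the original ResNet paper. The sketch below tracks the spatial size for a 224 × 224 input and counts the convolutions inside the bottleneck blocks; this count excludes the 7 × 7 stem convolution and the 1 × 1 projection shortcuts. It also shows the identity shortcut on a plain vector. Names are hypothetical and the code is illustrative only.

```python
STAGES = [  # (bottleneck blocks, output channels) for each ResNet-50 stage
    (3, 256),
    (4, 512),
    (6, 1024),
    (3, 2048),
]


def resnet50_summary(input_size=224):
    """Return the (height, width, channels) after each stage and the bottleneck conv count."""
    size = input_size // 2   # 7x7 stem convolution, stride 2
    size //= 2               # 3x3 max pooling, stride 2
    shapes = []
    for stage, (_, channels) in enumerate(STAGES):
        if stage > 0:
            size //= 2       # the first block of stages 2-4 downsamples by 2
        shapes.append((size, size, channels))
    bottleneck_convs = 3 * sum(blocks for blocks, _ in STAGES)  # 1x1, 3x3, 1x1 per block
    return shapes, bottleneck_convs


def residual_add(fx, x):
    """Identity shortcut: element-wise F(x) + x followed by ReLU."""
    return [max(0.0, a + b) for a, b in zip(fx, x)]


shapes, convs = resnet50_summary()
print(shapes, convs)
```

The 16 bottleneck blocks of three convolutions each account for 48 convolutions, matching the count given above, and the final 7 × 7 × 2048 map is what the average pooling layer reduces to a 2048-dimensional vector before the fully connected layer.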

GoogleNet (Inception V3)

The Inception V345 model comprises a total of 42 layers. It begins with a stem block for initial feature extraction, followed by a series of diverse Inception modules interleaved with grid-size reduction blocks. The Inception modules use parallel paths with different kernel sizes, including 1 × 1, 3 × 3, and 5 × 5 convolutions, as well as pooling operations, to capture multi-scale features efficiently. Grid-size reduction blocks decrease the spatial dimensions, and an auxiliary classifier helps mitigate the vanishing gradient problem during training by offering an extra path for gradient flow, acting as a regularizer, and encouraging the learning of useful features in intermediate layers. The architecture ends with global average pooling, fully connected layers, and a softmax output layer for classification. The architecture of the network is illustrated in Fig. 4d46.
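The parallel Inception paths can be merged because, with stride 1 and "same" padding, every branch preserves the spatial size, so the branch outputs can be concatenated along the channel axis; grid-size reduction instead uses stride 2. The sketch below checks this with the standard convolution output-size formula. The channel widths are illustrative assumptions, not values taken from the text, and the helper names are hypothetical.

```python
def conv_output_size(size, kernel, stride=1, padding=None):
    """Spatial output size of a convolution or pooling; padding=None means 'same' padding."""
    if padding is None:
        padding = (kernel - 1) // 2
    return (size + 2 * padding - kernel) // stride + 1


def inception_module(size, branches):
    """Return (spatial size, channels) after concatenating parallel branches.

    `branches` maps a branch name to (kernel size, output channels).
    """
    sizes = {conv_output_size(size, k) for k, _ in branches.values()}
    if len(sizes) != 1:
        raise ValueError("branches must agree spatially to be concatenated")
    return sizes.pop(), sum(c for _, c in branches.values())


# Illustrative channel widths (assumed), applied to a 35 x 35 grid.
BRANCHES = {
    "1x1": (1, 64),
    "3x3": (3, 96),
    "5x5": (5, 64),
    "pool": (3, 32),  # 3x3 pooling followed by a 1x1 convolution
}
print(inception_module(35, BRANCHES))
```

For example, a 3 × 3 stride-2 convolution without padding maps a 35 × 35 grid to 17 × 17, which is the kind of halving performed by the grid-size reduction blocks.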

Weight-centric decision mechanism

The Weight-Centric Decision Mechanism is an approach that leverages model-specific test accuracy scores to assign weighted importance to each model in a multi-model framework. By factoring in these accuracy-derived weights, the mechanism aims to increase the likelihood of correct classifications across the framework by favoring models with higher accuracy scores. The selection criteria for the weights, working principle of the mechanism and its significance are reported in the following sub-sections.

Weight computation

Four individual models were trained on the training set and evaluated on the test set. The resulting test accuracy scores act as the weights of the weight-centric mechanism: a model with higher test accuracy is more likely to classify an image correctly than a model with lower test accuracy, so it receives a higher weight. The weight \(w_i\) of each model is calculated as follows:

$$w_i = m \times \mathrm{test\_accuracy}_i$$

(1)

where \(\mathrm{test\_accuracy}_i\) is the test accuracy score of the \(i\)th model and the multiplier \(m\) is a scaling factor applied to the accuracy scores, as seen in Eq. 1. This ensures that models with higher test accuracy are assigned greater weight, so that the final decision depends both on what each model learned from the training set and on how well it generalized to the test set.
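Combining Eq. 1 with the aggregation rule formalized later in Eqs. 2-4 shows what role the multiplier plays. Writing \(\mu\) for the multiplier of Eq. 1 (to avoid a clash with the number of classes \(m\) used later), and assuming, as Eq. 1 suggests, that the same \(\mu > 0\) is applied to every model, it factors out of every class-score:

$$\mathcal{O}\left( \mathcal{C}_j \right) = \sum_{i=1}^{n} \mathrm{CAF}\left( p_i, \mathcal{C}_j \right) \times \mu \, \mathrm{test\_accuracy}_i = \mu \sum_{i=1}^{n} \mathrm{CAF}\left( p_i, \mathcal{C}_j \right) \times \mathrm{test\_accuracy}_i$$

$$\mathbb{Z} = \arg\max_{1 \le j \le m} \mathcal{O}\left( \mathcal{C}_j \right) = \arg\max_{1 \le j \le m} \sum_{i=1}^{n} \mathrm{CAF}\left( p_i, \mathcal{C}_j \right) \times \mathrm{test\_accuracy}_i$$

A shared multiplier therefore rescales the class-scores without changing the predicted class; it would change the relative influence of the models only if a different multiplier were chosen for each model.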

Weight-centric decision mechanism

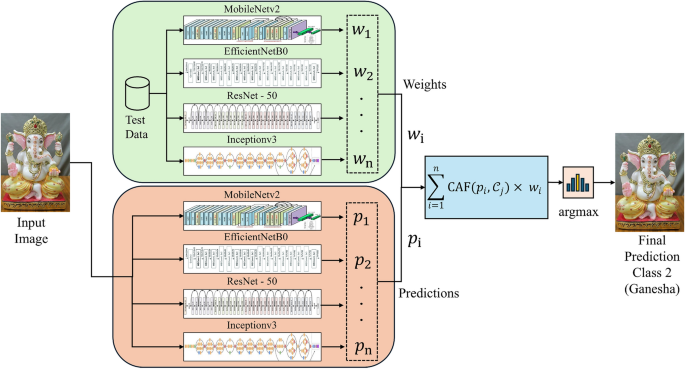

In the weight-centric mechanism, the framework follows a strategic approach to enhance classification accuracy. The working principle of the weight-centric decision mechanism is illustrated in Fig. 5, and its steps are as follows:

(i) Prior to making predictions, the four deep models are evaluated on a carefully selected test set to obtain model-wise test accuracies. These test accuracies subsequently act as the weights of the models, denoted by \(w_1, w_2, \dots, w_n\), where \(n\) is the number of deep models used in the framework (here \(n = 4\)). The probable classes of an input image are denoted by \(\mathcal{C}_1, \mathcal{C}_2, \dots, \mathcal{C}_m\), where \(m\) is the number of probable classes of an input image object (here \(m = 10\)).

(ii) The class predictions of the models are denoted by \(p_1, p_2, \dots, p_n\), one prediction per model.

(iii) Each probable class has its own class-bucket. If a model’s predicted class from (ii) matches a probable class, the weight of that model from (i) is added to the corresponding class-bucket. This is repeated for all the deep models. Finally, the probable class whose bucket holds the highest aggregated value is selected as the final output class, denoted by \(\mathbb{Z}\). The weight-centric decision mechanism is formalized in Eqs. 2–4.

(iv) For instance, in the current study there are ten decision classes, viz. Balaji, Durga Maa, Ganesha, Hanuman, Kali Maa, Khatu Shyam, Krishna, Sai Baba, Saraswati, and Shiva. If a particular model predicts an image as Ganesha, the weight of that model is added to the bucket of Ganesha. At the end, the bucket with the maximum aggregated score gives the final output class of the image object.

(v) The idea is to give greater influence to models with higher test accuracies, on the assumption that they are more reliable. This improves the reliability and precision of the final result and, in turn, the framework’s performance.

In summary, the weight-centric decision mechanism operates as follows. The deep models are first trained and evaluated on test data, producing classification accuracies that serve as the weights of the models in the decision mechanism. At inference time, an input image is passed to the system and each trained model generates a prediction. The weight of each model is then added to the bucket of the class it predicted, and the final output class of the image is the decision class whose bucket holds the highest aggregated value.

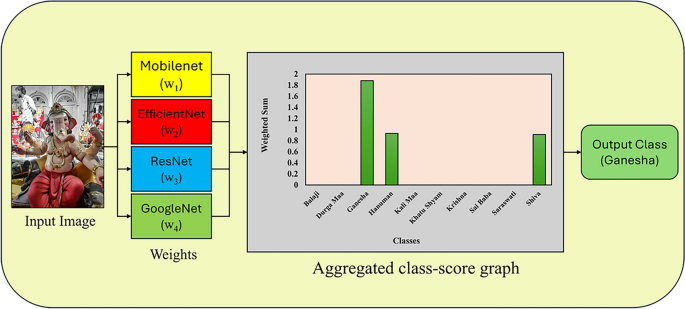

To illustrate this with an example, assume that MobileNet predicts Hanuman (Class 3), EfficientNet and ResNet predict Ganesha (Class 2), and Inceptionv3 predicts Shiva (Class 9). Each model’s weight, derived from its accuracy, is added to the bucket of the class it predicted, so a weighted sum is computed for each predicted class. The final prediction is the class with the maximum weighted sum, as shown in Fig. 6.

$$\mathbb{Z} = \arg\max_{1 \le j \le m} \mathcal{O}\left( \mathcal{C}_j \right)$$

(2)

Pictorial representation of final prediction generated using weight-centric decision mechanism.

Here, \(\mathcal{O}(\mathcal{C}_j)\) denotes the aggregated class-score of the \(j\)th probable class, where \(1 \le j \le m\).

$$\mathcal{O}\left( \mathcal{C}_j \right) = \sum_{i=1}^{n} \mathrm{CAF}\left( p_i, \mathcal{C}_j \right) \times w_i$$

(3)

Here, if the prediction \(p_i\) of the \(i\)th model matches the \(j\)th probable class \(\mathcal{C}_j\), the weight \(w_i\) of the \(i\)th model is added to that class-bucket. CAF denotes the class-affinity factor, defined in Eq. 4.

$$\mathrm{CAF}\left( p_i, \mathcal{C}_j \right) = \begin{cases} 1, & \text{if } p_i = \mathcal{C}_j \\ 0, & \text{otherwise} \end{cases}$$

(4)
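Eqs. 2-4 translate into a few lines of code. The sketch below is a minimal illustration, not the MythicVision implementation: it assumes each model has already reduced its softmax output to a top-1 class index, and the weights are hypothetical placeholders, not the accuracies measured in this study. The text does not say how ties between buckets are broken; here Python’s `max` keeps the first bucket created, which is an assumption.

```python
CLASSES = ["Balaji", "Durga Maa", "Ganesha", "Hanuman", "Kali Maa",
           "Khatu Shyam", "Krishna", "Sai Baba", "Saraswati", "Shiva"]


def weight_centric_decision(predictions, weights):
    """Return (Z, buckets) following Eqs. 2-4.

    predictions: model name -> predicted class index p_i
    weights:     model name -> weight w_i from Eq. 1
    """
    buckets = {}
    for model, predicted in predictions.items():
        # CAF(p_i, C_j) is 1 only for j == p_i, so w_i goes into exactly one bucket.
        buckets[predicted] = buckets.get(predicted, 0.0) + weights[model]
    # Eq. 2: the class whose bucket holds the largest aggregated score wins.
    winner = max(buckets, key=buckets.get)
    return winner, buckets


# Hypothetical weights (m = 1); NOT the accuracies measured in the study.
weights = {"MobileNet": 0.90, "EfficientNet": 0.85, "ResNet": 0.80, "InceptionV3": 0.88}
# The example from the text: Hanuman (3), Ganesha (2) twice, Shiva (9).
predictions = {"MobileNet": 3, "EfficientNet": 2, "ResNet": 2, "InceptionV3": 9}

winner, buckets = weight_centric_decision(predictions, weights)
print(CLASSES[winner], buckets)
```

Only the classes that receive at least one vote get a bucket; every other class implicitly scores zero, which is what Eq. 3 gives for a class that no model predicts.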

Significance of weight-centric decision mechanism

The weight-centric mechanism offers an advantage over the conventional majority-based voting approach. When the models disagree, majority voting can end in ties (for example, two models against two) that can only be resolved by arbitrary assumptions, which may lead to inaccurate classifications. By weighting each vote with the corresponding model’s accuracy, the weight-centric decision mechanism gives priority to the better-performing models in such cases, which helps with intricate data patterns and minority classes. As shown in Table 5, this adaptive approach enhances classification accuracy and the reliability of the results.
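The tie-breaking argument above can be made concrete by comparing the two rules on a two-against-two split. The sketch below is illustrative only; the model weights are the same kind of hypothetical placeholders as before, not measured accuracies, and the function names are hypothetical.

```python
from collections import Counter


def majority_vote(predictions):
    """Plain majority vote; returns None when the top vote count is tied."""
    counts = Counter(predictions.values()).most_common()
    if len(counts) > 1 and counts[0][1] == counts[1][1]:
        return None  # tie: a majority rule alone cannot decide
    return counts[0][0]


def weighted_vote(predictions, weights):
    """Accuracy-weighted vote as in Eqs. 2-4."""
    scores = {}
    for model, label in predictions.items():
        scores[label] = scores.get(label, 0.0) + weights[model]
    return max(scores, key=scores.get)


# Hypothetical weights; NOT the accuracies measured in the study.
weights = {"MobileNet": 0.90, "EfficientNet": 0.85, "ResNet": 0.80, "InceptionV3": 0.88}
# Two models vote Hanuman (3), two vote Ganesha (2).
tied = {"MobileNet": 3, "EfficientNet": 2, "ResNet": 2, "InceptionV3": 3}

print(majority_vote(tied), weighted_vote(tied, weights))
```

With weights as close together as these hypothetical ones, any class backed by more models also collects the larger weighted sum, so the two rules can only differ when the top vote counts are tied; how often that happens in practice depends on the real accuracies and the data.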

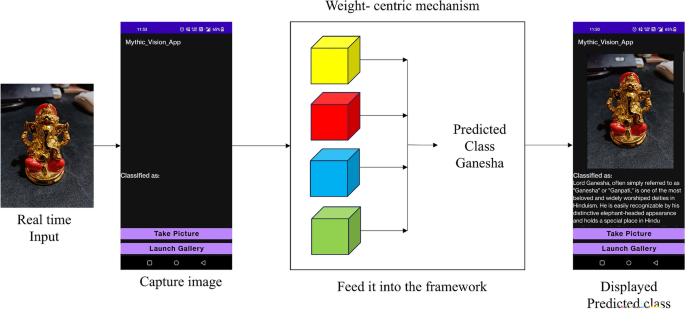

Development of MythicVision application

The main objective of the current research is to develop a convenient and user-friendly image classification application that identifies deities in real-time images and provides a detailed description of them. Therefore, the whole framework discussed in the “Dataset preparation”, “Employed deep models”, and “Weight-centric decision mechanism” sections (i.e., dataset creation, model selection, and the weight-centric approach) has been integrated into the designed mobile application, named MythicVision. The operational flow of the MythicVision application is illustrated in Fig. 7. The application was developed by converting the entire framework into a TensorFlow Lite file using the TensorFlow library. This file was then brought into Android Studio, a dynamic platform for application development, where an application with a basic user interface (UI) was developed. All libraries and frameworks used to develop MythicVision are listed in Table 6.

Within this application, users encounter an interface featuring two essential buttons. The “Take Pictures” button activates the device’s camera, enabling users to capture real-life images as they explore Indian mythology. The “Launch Gallery” button lets users open their device’s image gallery and select a picture they captured previously. Once an image is captured or selected, it is passed to the integrated deep learning models, whose predictions are combined by the weight-centric mechanism. The application then presents the user with the predicted class of the image, along with comprehensive information about the recognized deity, as shown in Fig. 7.

Overall working principle of the designed MythicVision mobile application for identifying deities from real-time images. The entire image classification framework is integrated into the application. First, an image is captured in real time through the MythicVision application using the built-in mobile camera and classified using the developed framework. Then, based on the classified image, a comprehensive description of the specific deity (output class) is furnished.

Salient features of MythicVision

The MythicVision app is designed to enhance the experience of users by blending technology with cultural exploration. With a focus on accessibility, interactivity, and cultural preservation, it aims to provide an enriching and educational tool for anyone interested in learning about Indian mythology and deities.

- Cross-platform application: The developed application is compatible with Android-based mobiles, PCs, tablets, and other devices.
- Temple location data: As users scan deities through the app, a future enhancement could provide details about the nearest temples associated with the recognized deities.
- Multilingual support: The software could be extended to provide information in various languages, making it accessible to a wider and more diverse range of tourists from around the world.
- Collaborative platform: A community-driven platform could be created where users contribute additional information and stories about detected deities.
- Expansion to other cultures: The application may be extended to detect and classify deities from different cultures around the world.
- Interactive quizzes: Interactive quizzes and games could be integrated into the software to make learning about Indian mythology even more engaging and fun.

User feedback and recommendations

Gathering user feedback is a critical step in ensuring the system’s accessibility, usability, and overall effectiveness in the field of cultural exploration. Initial user feedback highlighted the following points, including key areas for improvement:

- Incorrect upload errors: Users encountered errors when uploading corrupt images or images in an unsupported format. This can be rectified by handling such errors and prompting the user to upload an image in a supported format (JPEG, PNG, etc.).
- Easy navigation controls: Users appreciated the simple navigation controls of the application, particularly the ability to upload deity images with a single click.
- Incorporating a broader range of deities: Some users suggested adding more local deities to the MythicVision framework to enhance its inclusivity and cultural representation.

This feedback is crucial for assessing the robustness of our framework and will be integrated into future updates to enhance the system’s accessibility and reliability for all users.
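The first feedback item suggests validating uploads before they reach the models. The sketch below checks the file signature ("magic bytes") of JPEG and PNG files and returns a user-facing message instead of failing. It is written in Python only to keep the examples in one language, whereas MythicVision itself is an Android application; the function names and messages are hypothetical. A valid signature does not prove that the rest of the file is intact, so a real implementation would still need to catch decoding errors.

```python
JPEG_MAGIC = b"\xff\xd8\xff"        # JPEG files start with an SOI marker
PNG_MAGIC = b"\x89PNG\r\n\x1a\n"    # fixed 8-byte PNG signature


def detect_image_format(data: bytes):
    """Return 'jpeg' or 'png' from the file signature, or None if unsupported."""
    if data.startswith(JPEG_MAGIC):
        return "jpeg"
    if data.startswith(PNG_MAGIC):
        return "png"
    return None


def validate_upload(data: bytes):
    """Return (ok, detail) so the UI can show a helpful message instead of crashing."""
    if not data:
        return False, "The selected file is empty. Please choose another image."
    fmt = detect_image_format(data)
    if fmt is None:
        return False, "Unsupported or corrupt image. Please upload a JPEG or PNG file."
    return True, fmt
```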

Cultural impact of MythicVision

MythicVision plays an important role in connecting people with the rich cultural heritage of India. By blending modern technology with ancient traditions, the app has the potential to make a positive impact on how people engage with and understand mythology. Some of the significant impacts MythicVision can have on the culture of India are as follows:

- Preserving cultural heritage: The MythicVision app helps preserve Indian mythology by making information about deities accessible, helping to pass this knowledge down to future generations.
- Increasing cultural awareness: MythicVision helps foreign tourists learn about Indian culture with a simple click of a button, promoting a better understanding of its mythology and traditions.
- Promoting interfaith discussion: As it is extended to recognize deities from different cultures, the app can promote respect and understanding across religions, encouraging dialogue and shared learning.
- Supporting local communities: By providing information about nearby temples and cultural sites, the app can boost interest in and support for local heritage, benefiting the community.

In these ways, MythicVision not only educates users about various Indian deities but also helps preserve and share the cultural richness of India and the world.
