We demonstrate the capability and potential of our proposed one-shot learning method through various problems, and discuss choices of the local solution operator \(\widetilde{{\mathcal{G}}}\), variations in Δf, effectiveness of our method, different mesh resolutions, and generalization. We first introduce the problem setup of learning solution operators for PDEs using one-shot learning method and then present the results.

Learning solution operators for PDEs

We consider a physical system governed by a PDE defined on a spatio-temporal domain \(\Omega \subset {{\mathbb{R}}}^{d}\):

$${\mathcal{F}}[u({\bf{x}});f({\bf{x}})]=0,\quad {\bf{x}}=({x}_{1},{x}_{2},\ldots,{x}_{d})\in \Omega$$

with suitable initial and boundary conditions

$${\mathcal{B}}(u({\bf{x}}),{\bf{x}})=0,$$

(1)

where u(x) is the solution of the PDE and f(x) is a forcing term. The solution u depends on f, and thus we define the solution operator as

$${\mathcal{G}}:f({\bf{x}})\mapsto u({\bf{x}}).$$

For nonlinear PDEs, \({\mathcal{G}}\) is a nonlinear operator.

In many problems, the PDE of a physical system is unknown or computationally expensive to solve, and instead, sparse data representing the physical system is available. Specifically, we consider a dataset \({\mathcal{T}}={\{({f}_{i},{u}_{i})\}}_{i=1}^{| {\mathcal{T}}| }\), and (fi, ui) is the i-th data point, where \({u}_{i}={\mathcal{G}}({f}_{i})\) is the PDE solution for fi. Our goal is to learn \({\mathcal{G}}\) from the training dataset \({\mathcal{T}}\), such that for a new f, we can predict the corresponding solution \(u={\mathcal{G}}(f)\). When \({\mathcal{T}}\) is sufficiently large, then we can learn \({\mathcal{G}}\) straightforwardly by using neural networks, whose input and output are f and u, respectively. Many networks have been proposed in this manner such as DeepONet12 and Fourier neural operator19. In this study, we consider a very challenging scenario where we have only one data point for training, i.e., one-shot learning with \(| {\mathcal{T}}|=1\), and we let \({\mathcal{T}}=\{({f}_{{\mathcal{T}}},{u}_{{\mathcal{T}}})\}\). Note that here “one-shot” represents one input-output data pair in the context of operator learning.

One-shot learning method based on the principle of locality

It is impossible in general to learn the PDE solution operator \({\mathcal{G}}\) from a single data point. To address this challenge, here we consider that \({\mathcal{T}}\) is not specified, and we can select \({f}_{{\mathcal{T}}}\). In addition, instead of learning \({\mathcal{G}}\) for the entire input space, we only aim to predict \({\mathcal{G}}(f)\) in a neighborhood of some f0.

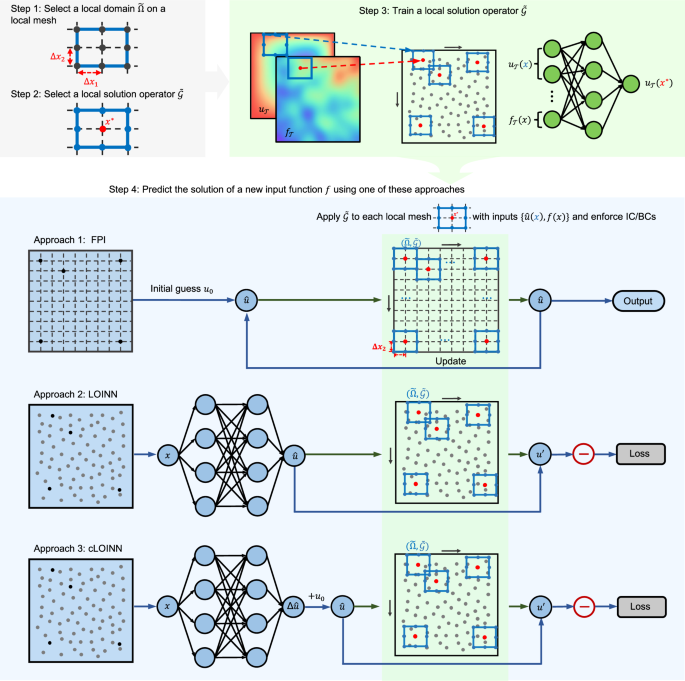

To overcome the difficulty of training a machine learning model based on only one data point, we leverage the principle of locality that (partial) derivatives in the PDEs are mathematically defined locally, i.e., the same PDE is satisfied in an arbitrary-shaped small domain inside Ω. Based on this fact, instead of considering the entire computational domain, we consider a “canonical” small local domain \(\tilde{\Omega }\), and we define a local solution operator \(\tilde{{\mathcal{G}}}\) at \(\tilde{\Omega }\). In our method, we can place \(\tilde{\Omega }\) at any specific location inside Ω, and each placement/realization leads to one training point for \(\tilde{{\mathcal{G}}}\). In this way, we could easily generate a large training dataset for \(\tilde{{\mathcal{G}}}\). Therefore, we transform the one-shot learning of \({\mathcal{G}}\) into a classical learning of \(\tilde{{\mathcal{G}}}\). Once \(\tilde{{\mathcal{G}}}\) is well trained, we predict \({\mathcal{G}}(f)\) for a new f by applying \(\tilde{{\mathcal{G}}}\) as a constraint at arbitrary local domains of the PDE solution \(u={\mathcal{G}}(f)\). We enforce IC/BCs in this process by hard or soft constraints, which ensure that the predicted solutions are not only locally accurate but also globally consistent across the entire domain. Specifically, our method includes the following four steps (Fig. 1). The details of each step can be found in “Methods” section.

(Step 1) We select a suitable polygon, such as a rectangle, on a local mesh with step size Δx1 and Δx2, and thus define a local domain \(\tilde{\Omega }\) (the black nodes). (Step 2) We select a target mesh node x* and define a local solution operator \(\tilde{{\mathcal{G}}}\). (Step 3) We learn \(\tilde{{\mathcal{G}}}\) using a neural network from a dataset constructed from \({\mathcal{T}}=({f}_{{\mathcal{T}}},{u}_{{\mathcal{T}}})\). (Step 4) For a new PDE condition (i.e., a new input function f), we utilize the pre-trained \(\tilde{{\mathcal{G}}}\) to find the corresponding PDE solution by using one of the following approaches. (Approach 1, FPI) We consider points on an equispaced global mesh. Starting with an initial guess u0(x), we apply \(\tilde{{\mathcal{G}}}\) iteratively to update the PDE solution until it is converged. (Approach 2, LOINN) We use a network to approximate the PDE solution. We apply \(\tilde{{\mathcal{G}}}\) at different random locations to compute the loss function. (Approach 3, cLOINN) We use a network to approximate the difference between the PDE solution and the given u0(x).

-

Select a “canonical” local domain \(\tilde{\Omega }\). We consider a virtual background equispaced mesh and select a polygon on this local mesh. We denote the set of all the mesh nodes that lie on the edges and within the interior of the chosen polygon (marked as black nodes in Fig. 1) as \(\tilde{\Omega }\). We show a general choice of \(\tilde{\Omega }\) in Fig. 2a (left) by black nodes.

Fig. 2: Selecting local domains and learning local solution operators.

a A general choice of the local domain \(\tilde{\Omega }\) is a set of nodes on a polygon. \(\tilde{\Omega }\) can have different shapes and sizes according to a specific PDE. A local solution operator \(\tilde{{\mathcal{G}}}\) is defined on \(\tilde{\Omega }\). b–f Examples of local domains and local solution operators used in this paper. g, h When learning \(\tilde{{\mathcal{G}}}\), the training points based on \(\tilde{\Omega }\) are either (g) selected on a global mesh or (h) randomly sampled.

-

Select a local solution operator \(\tilde{{\mathcal{G}}}\). We choose a specific location x* (the red node in Fig. 1) from the local domain \(\tilde{\Omega }\) in step 1, and then the other points \({\tilde{\Omega }}_{{\rm{aux}}}=\{{\bf{x}}\in \tilde{\Omega }| {\bf{x}}\ne {{\bf{x}}}^{*}\}\) are called “auxiliary points” (the blue nodes in Fig. 1). We construct a local solution operator

$$\tilde{{\mathcal{G}}}:\left(\{u({\bf{x}}):{\bf{x}}\in {\tilde{\Omega }}_{{\rm{aux}}}\},\{f({\bf{x}}):{\bf{x}}\in \tilde{\Omega }\}\right)\mapsto u({{\bf{x}}}^{*}).$$

This local solution operator \(\tilde{{\mathcal{G}}}\) is learned by a fully-connected neural network that takes u values of auxiliary points and f values in \(\tilde{\Omega }\) as inputs and output u values of x*, which captures the local relationship of the PDE. The choice of the shape and size of \(\tilde{\Omega }\) and \(\tilde{{\mathcal{G}}}\) is problem dependent. In Figs. 2b–f, we present several choices of \(\tilde{\Omega }\) and \(\tilde{{\mathcal{G}}}\) used in this paper. The principles for selecting the local domain \(\tilde{\Omega }\) and local solution operator \(\tilde{{\mathcal{G}}}\) are summarized as follows. First, the shape of \(\tilde{\Omega }\) should align with the dimensional structure of the data. For example, one-dimensional problems, \(\tilde{\Omega }\) could be a line segment, while in two-dimensional scenarios, it could be a polygon. Second, for time-dependent system, in additional to spatial domain, \(\tilde{\Omega }\) should also include past temporal states.

-

Train the local solution operator \(\tilde{{\mathcal{G}}}\). Utilizing the dataset \({\mathcal{T}}=({f}_{{\mathcal{T}}},{u}_{{\mathcal{T}}})\), we place the local domain \(\tilde{\Omega }\) at different locations of Ω, which can be either a global structured mesh or randomly sampled locations (Figs. 2g and h). This process generates many input-output data pairs for training \(\tilde{{\mathcal{G}}}\).

-

Predict the solution u for a new input function f with suitable IC/BCs. For a new PDE condition f, we choose one of the three approaches to find the global PDE solution using the pre-trained local solution operator \(\tilde{{\mathcal{G}}}\): fixed-point iteration (FPI), local-solution-operator informed neural network (LOINN) or local-solution-operator informed neural network with correction (cLOINN).

FPI is a mesh-based approach, and we can only use the structured equispaced global mesh to predict the solution. In contrast, with LOINN and cLOINN, it is possible to train the neural networks using randomly sampled data points in the domain. When we use equispaced global grid in LOINN and cLOINN, we denote the methods as LOINN-grid and cLOINN-grid, respectively. When we use randomly sampled point locations, we denote the methods as LOINN-random and cLOINN-random. The technical and implementation details of each step are provided in “Methods” section and the Supplementary Section S2.

Our numerical experiments cover a range of representative scenarios, including multi-dimensional, linear and nonlinear PDE systems, PDEs with f either as a source term or a coefficient field, and PDEs defined in a complex geometry. Notably, there are no existing methods to compare with for the problem we are addressing. In each experiment, we first obtain the training dataset via finite difference methods on an equispaced dense mesh. The parameters for generating datasets and the hyperparameters of pre-trained neural networks are listed in Supplementary Section S2. To test the developed method, we randomly sample 100 new f by f = f0 + Δf, in which Δf is sampled from a Gaussian random field (GRF) with a correlation length ltest = 0.1 and various amplitude σtest. We compute the geometric mean and standard deviation of the L2 relative errors for the 100 test cases (Table 1). For all experiments, the Python library DeepXDE3 is utilized to implement the neural networks.

Learning the solution operator for a linear PDE

We first demonstrate the capability of our method with a pedagogical example of a one-dimensional Poisson equation

$$\Delta u=100f(x),\quad x\in [0,1]$$

with the zero Dirichlet boundary condition, and the solution operator is \({\mathcal{G}}:f\mapsto u\).

As described in the one-shot learning method based on the principle of locality, we first need to select a “canonical” local domain \(\tilde{\Omega }\), which is a line segment in 1D case. We choose the simplest local solution operator using 3 nodes (Fig. 2b) \(\widetilde{{\mathcal{G}}}:\{u(x-\Delta x),u(x+\Delta x),f(x)\}\mapsto u(x)\) with Δx = 0.01 from \(\tilde{\Omega }\). The training dataset \({\mathcal{T}}\) only has one data point \(({f}_{{\mathcal{T}}},{u}_{{\mathcal{T}}})\) (Fig. 3a), where \({f}_{{\mathcal{T}}}\) is generated based on \({f}_{0}=\sin (2\pi x)\). Using the training dataset, we place \(\tilde{\Omega }\) at different locations to generate data points and train the local solution operator. For a new PDE condition f, we apply our trained \(\widetilde{{\mathcal{G}}}\) and enforce the zero Dirichlet boundary condition at x = 0 and x = 1 to find the solution. In Fig. 3c, we show examples of testing cases f = f0 + Δf with different σtest. When σtest is larger, there is a more significant discrepancy between f0 and f.

a The training data includes a random \({f}_{{\mathcal{T}}}\) generated from GRF and the corresponding solution \({u}_{{\mathcal{T}}}\). b The convergence of L2 relative errors of different approaches for various test cases. The shaded regions represent one standard deviation of the L2 relative errors from 100 test cases. c Testing examples of random f = f0 + Δf with Δf sampled from GRF of σtest = 0.02, 0.05, 0.10, 0.15 and ltest = 0.1. d Prediction example of different approaches for various test cases. e Prediction errors (the geometric mean and standard deviation) when training by frandom with amplitude σtrain and testing on functions with amplitude σtest. The length scales ltrain = 0.01 and ltest = 0.10 are fixed. f Prediction errors (the geometric mean and standard deviation) when training by frandom with different length scale ltrain and amplitude σtrain = 0.10, and testing on functions with amplitude σtest = 0.10 and ltest = 0.10.

We evaluate the performance of FPI, LOINN and cLOINN approaches on the grid data, as well as LOINN and cLOINN using randomly sampled data points (Fig. 3b). For FPI, LOINN-grid, and cLOINN-grid, we use a mesh with 101 equispaced points. For LOINN-random and cLOINN-random, we use 101 random points. We report the geometric mean of L2 relative errors of all cases in Table 1 for different σtest. As expected, the smaller σtest we use, the better the performance. When σtest = 0.02, all approaches achieve an L2 relative error of around 1%. In Fig. 3d, we show prediction examples of using FPI and cLOINN-random approaches. For this simple case, the results of using different approaches and data sampling are similar. It is observed that in this experiment, cLOINN converges faster than LOINN and FPI.

Discussion on the choice of training data

Given that we use only one training data point, the selection of \({f}_{{\mathcal{T}}}\) in \({\mathcal{T}}\) is critical. Ideally, \({f}_{{\mathcal{T}}}\) should exhibit patterns similar to those we aim to predict, yet contain some randomness to enrich the information to be extracted. In our experiments, we use \({f}_{{\mathcal{T}}}(x)={f}_{{\rm{random}}}(x)+{f}_{0}(x)\), where \({f}_{{\rm{random}}}(x) \sim {\mathcal{GP}}(0,k({x}_{1},{x}_{2}))\) (see “Methods” section for more details). We now study the effect of \({f}_{{\mathcal{T}}}\) chosen with different amplitude σtrain and length scale ltrain different from the test datasets, as well as the influence of changing the general shape of training forcing function \({f}_{{\mathcal{T}}}\).

First, we explore how the amplitude σtrain influences prediction accuracy for various σtest (Fig. 3e). We randomly sample frandom with a fixed ltrain = 0.01 across different σtrain = 0.02, 0.05, 0.10, 0.15, and train one local solution operator for each σtrain. We then fix ltest = 0.10, and test 100 cases using σtest = 0.02, 0.05, 0.10, or 0.15. Here we only show the results of FPI, since performance of other methods is similar. We find that the errors are generally larger for test functions with larger σtest (i.e., higher variability). Furthermore, we observe that the prediction accuracy is robust against different σtrain.

Second, we are interested in how different ltrain impact the performance of local solution operators (Fig. 3f). We train one local solution operator for each ltrain = 0.005, 0.01, 0.05, 0.10, 0.15 and σtrain = 0.10, then test them using FPI on 100 test cases with σtest = 0.10 and ltest = 0.10. When ltrain is too small (e.g., 0.005) or too large (e.g., 0.15), the prediction errors tend to increase with large standard deviations. The reasonable range of ltrain for frandom is between 0.01 to 0.1 in this example. These results suggest that effective training data should have a length scale ltrain that introduce sufficient randomness without inducing overly rapid fluctuations.

Next, we study the influence of training forcing function \({f}_{{\mathcal{T}}}\). Instead of using local solution operators trained from the sine wave \({f}_{{\mathcal{T}}}(x)={f}_{{\rm{random}}}(x)+\sin (2\pi x)\), we consider three alternatives: (1) a phase-shifted sine wave, \({f}_{{\mathcal{T}}}(x)={f}_{{\rm{random}}}(x)+\sin (2\pi x+\pi )\) (Supplementary Fig. S3a), (2) a cosine wave, \({f}_{{\mathcal{T}}}(x)={f}_{{\rm{random}}}(x)+\cos (2\pi x)\) (Supplementary Fig. S3b), and (3) a fully random function \({f}_{{\mathcal{T}}}(x)={f}_{{\rm{random}}}(x)\) with σtrain = 1.0 and ltrain = 0.01 (Supplementary Fig. S3c). In both (1) and (2), we randomly sample frandom(x) with ltrain = 0.01 and σtrain = 0.10 for training local solution operators. We test them on 100 test cases with σtest = 0.10 and ltest = 0.10. For the phase-shifted sine wave, the overall shape remains similar but is flipped with respect to the x-axis. We observe a similar error 3.58 ± 6.40% with a larger standard deviation, compared to 3.71 ± 2.96% for the original sine wave. For the cosine wave, the phase is different, and the error is 8.73 ± 2.78% which is larger. For the fully random function without any information of f0, the error further increases to 9.68 ± 0.73%. These results suggest that variations of the training data can affect predictive accuracy. Nonetheless, our method performs reasonably successful, demonstrating robustness to such changes.

Effectiveness of our method and comparison with the baselines

Our approach solves a unique challenging scenario where traditional numerical methods, which depend on known PDE forms, and traditional data-driven methods, which typically require large datasets, do not apply. There are no existing methods directly comparable to ours for this setting. Nonetheless, it would be helpful to provide baselines as comparisons.

Since a new test case is f = f0 + Δf, u0 in the data pair (f0, u0) can be used as a baseline. Notably, u0 serves as the initial guess of FPI approach, so the convergence of the L2 relative errors in FPI itself reflects the effectiveness of our approach. Take the 1D Poisson equation as an example, when σtest = 0.10, the L2 relative errors for the baseline u0 is 16.29 ± 10.77%, which is significantly higher than the errors achieved by our method (all below 5% in Fig. 3e).

We also consider DeepONet12 as the second baseline. With only one data pair \(({f}_{{\mathcal{T}}},{u}_{{\mathcal{T}}})\), the error in predicting u from f with σtest = 0.10 using DeepONet is very large at 20.73 ± 14.02%. Even when using both (f0, u0) and \(({f}_{{\mathcal{T}}},{u}_{{\mathcal{T}}})\) as the training data, the error remains high at 15.83 ± 10.26%.

Learning the solution operator for a time-dependent PDE

We then consider a time-dependent linear diffusion equation

$$\frac{\partial u}{\partial t}=D\frac{{\partial }^{2}u}{\partial {x}^{2}}+f(x),\quad x\in [0,1],\quad t\in [0,1]$$

with zero boundary and initial conditions, where D = 0.01 is the diffusion coefficient. We aim to learn the solution operator \({\mathcal{G}}:f\mapsto u\) for a class of f = f0 + Δf with \({f}_{0}=0.9\sin (2\pi x)\).

We select the simplest local solution operator defined on a local domain with 4 spatial-temporal nodes (Fig. 2d):

$$\widetilde{{\mathcal{G}}}:\{u(x,t-\Delta t),u(x-\Delta x,t),u(x+\Delta x,t),f(x,t)\}\mapsto u(x,t).$$

Since it is a time-dependent problem, we also include the previous temporal node. To generate the training dataset \({\mathcal{T}}\), we randomly sample \({f}_{{\mathcal{T}}}\) shown in Supplementary Fig. S2a. FPI and LOINN/cLOINN-grid use an equispaced mesh of 101 × 101, and LOINN/cLOINN-random use 1012 random point locations.

We test these approaches for Δf sampled from GRF with σtest = 0.1, 0.3, 0.5, and 0.8. The details of error convergence are shown in Supplementary Fig. S2b. For a fixed σtest, FPI and cLOINN-grid both work well and outperform LOINN-grid (Table 1). LOINN-random and cLOINN-random both achieve better accuracy than LOINN/cLOINN-grid (e.g., an L2 relative error smaller than 1% when σtest = 0.1). When σtest is increased from 0.1 to 0.8, all approaches can an achieve an L2 relative error smaller than 8%. In this experiment, we conclude that cLOINN performs better and converges faster than LOINN. Also, the performance of LOINN and cLOINN with randomly sampled points are better than that with mesh points. In Supplementary Fig. S2c, we show a test example for σtest = 0.8 and the predictions and pointwise errors using FPI, LOINN-grid, cLOINN-grid, LOINN-random, and cLOINN-random.

Learning the solution operator for a nonlinear PDE

We have shown the capability of our one-shot operator learning method on linear PDEs. Now we consider a nonlinear diffusion-reaction equation with a source term f(x):

$$\frac{\partial u}{\partial t}=D\frac{{\partial }^{2}u}{\partial {x}^{2}}+k{u}^{2}+f(x),\quad x\in [0,1],t\in [0,1]$$

with zero initial and boundary conditions, where D = 0.01 is the diffusion coefficient, and k = 0.01 is the reaction rate. The solution operator we aim to learn is \({\mathcal{G}}:f\mapsto u\), where f = f0 + Δf with \({f}_{0}=\sin (2\pi x)\).

We select the same \(\widetilde{{\mathcal{G}}}\) as the previous linear diffusion equation example (Fig. 2d), and randomly sample \({f}_{{\mathcal{T}}}\) shown in Fig. 4a to train a local solution operator. FPI and LOINN/cLOINN-grid use an equispaced mesh of 101 × 101, and LOINN/cLOINN-random use 1012 random point locations. We also test these approaches for Δf sampled from GRF with σtest = 0.1, 0.3, 0.5, and 0.8 (Fig. 4b). FPI and LOINN/cLOINN-random achieve better accuracy than the others (e.g., L2 relative error smaller than 0.5% when σtest = 0.1). When σtest is increased from 0.1 to 0.8, all the approaches can achieve an L2 relative error smaller than 5%. In Fig. 4c, we show a test example for σtest = 0.8 and the predictions and pointwise errors using FPI, LOINN-grid, cLOINN-grid, LOINN-random, and cLOINN-random.

a The training data includes a random \({f}_{{\mathcal{T}}}\) generated from GRF and the corresponding solution \({u}_{{\mathcal{T}}}\). b The convergence of L2 relative errors of different approaches for various test cases. c Prediction example of different approaches for a test case with σtest = 0.8. d L2 relative error of different test functions with σtest = 0.1, 0.3, 0.5, 0.8 when using different numbers of point locations to show the effect of mesh resolutions.

Generalization of the local solution operator to very different testing functions

We evaluate the generalization capability of the local solution operator \(\widetilde{{\mathcal{G}}}\), which is trained from the sine wave \({f}_{{\mathcal{T}}}(x)={f}_{{\rm{random}}}(x)+\sin (2\pi x)\) and the corresponding \({u}_{{\mathcal{T}}}(x)\). We test it on various new f, including (1) a phase-shifted sine wave, \(f=\sin (2\pi x+\pi )\) (Fig. 5a), (2) a cosine wave, \(f=\cos (2\pi x)\) (Fig. 5b), (3) a higher-frequency sine wave, \(f=\sin (3\pi x)\) (Fig. 5c), (4) a sine wave with very high frequency, \(f=2\sin (9\pi x)\) (Fig. 5d), (5) a sum of two sine waves at moderate and high frequencies, \(f=\sin (6\pi x)+\sin (14\pi x)\) (Fig. 5e), and (6) a sum of multiple weighted sine waves at different frequencies, \(f=0.8\sin (6\pi x)+0.6\sin (14\pi x)+0.4\sin (26\pi x)+0.2\sin (42\pi x)\) (Fig. 5f). The corresponding test errors for these cases are 0.18%, 2.19%, 1.80%, 6.01%, 5.20%, 2.66%, respectively. Despite these f have a wide range of frequencies and phases (very different from the training data), the prediction errors remain low. The results indicates that the local solution operator has a good generalizability and effectively captures the local relationship of the nonlinear diffusion-reaction equation.

a A phase-shifted sine wave, \(f=\sin (2\pi x+\pi )\). b A cosine wave, \(f=\cos (2\pi x)\). c A higher-frequency sine wave, \(f=\sin (3\pi x)\). d A sine wave with very high frequency, \(f=2\sin (9\pi x)\). e A sum of two sine waves at moderate and high frequencies, \(f=\sin (6\pi x)+\sin (14\pi x)\). f A sum of multiple weighted sine waves at different frequencies, \(f=0.8\sin (6\pi x)+0.6\sin (14\pi x)+0.4\sin (26\pi x)+0.2\sin (42\pi x)\). The first column is various new f; the second column is the corresponding ground truth u; the third column is the FPI prediction for each f; and the last column is the absolute error between the ground truth u and the prediction.

Generalization of the local solution operator to noisy testing input functions

We apply the same trained local solution operator \(\widetilde{{\mathcal{G}}}\) to the noisy testing functions of the cosine wave \(f=\cos (2\pi x)\). We corrupt the testing function with 5%, 10%, 20%, and 30% Gaussian noise (Supplementary Fig. S5a). The test errors increase with the noise level (Supplementary Fig. S5b). Notably, even when the added noise is 30%, the error remains relatively small (8.90%).

Discussion on the choice of training data

Similar to that in the Poisson equation example, we study the influence of training function \({f}_{{\mathcal{T}}}\). We consider three alternatives for \({f}_{{\mathcal{T}}}\): (1) a phase-shifted sine wave, \({f}_{{\mathcal{T}}}(x)={f}_{{\rm{random}}}(x)+\sin (2\pi x+\pi )\) (Supplementary Fig. S4a), (2) a cosine wave, \({f}_{{\mathcal{T}}}(x)={f}_{{\rm{random}}}(x)+\cos (2\pi x)\) (Supplementary Fig. S4b), and (3) a higher-frequency sine wave \({f}_{{\mathcal{T}}}(x)={f}_{{\rm{random}}}(x)+\sin (3\pi x)\) (Supplementary Fig. S4c). In all the cases, we randomly sample frandom(x) with σtrain = 0.10 and ltrain = 0.01 for training local solution operators. We evaluate performance on 100 test cases with σtest = 0.80 and ltest = 0.10. For the phase-shifted sine wave, the error slightly increases to 3.28 ± 2.18%, compared to 2.32 ± 1.32% for the original sine wave. The cosine wave, which differs in phase, yields a error of 3.99 ± 3.37%. For the higher-frequency sine wave, the error increases to 4.61 ± 3.44%. These results further demonstrate that the method is robust to variations in the training data.

One-shot operator learning on a coarse mesh

In all the previous examples, we use a local mesh with resolution Δx = Δt = 0.01 for learning the local solution operator \(\tilde{{\mathcal{G}}}\). In this section, we investigate the performance of our methods using different mesh resolutions when training \(\widetilde{{\mathcal{G}}}\) and predicting solutions in Ω. For FPI, LOINN-grid, and cLOINN-grid, we generate input-output pairs for training \(\tilde{{\mathcal{G}}}\) on a structured equispaced global mesh \(\hat{\Omega }\) with mesh size Δx = Δt = 0.01, 0.02, 0.04, 0.05, and 0.07 (i.e., the numbers of points are 1012, 512, 262, 212, and 162), which matches the local mesh resolutions. For LOINN-random and cLOINN-random, we randomly sample 1012, 512, 262, 212, 162 point locations in Ω. We compare L2 relative errors with different σtest and resolutions. With denser mesh resolutions or more points, all approaches perform better (Fig. 4d). Even using a coarse mesh of 0.05, our methods can still achieve errors around 10% for all cases, which demonstrates the computational efficiency of our proposed methods.

Learning the solution operator for a PDE with parametric coefficient fields

We illustrate the choice of local domain using an advection equation

$$\frac{\partial s}{\partial t}+a(x)\frac{\partial s}{\partial x}=0,\quad x\in [0,1],t\in [0,1]$$

with initial condition s(x, 0) = x2 and boundary conditions \(s(0,t)=\sin (\pi t)\), where a(x) is the velocity coefficient. We learn the solution operator mapping from the coefficient field to the PDE solution: \({\mathcal{G}}:a\mapsto s\) for a class of a = a0 + 0.1Δf with a0 = 1.

The training dataset \({\mathcal{T}}\) with one data point \(({a}_{{\mathcal{T}}},{s}_{{\mathcal{T}}})\) is shown in Fig.S6a. We use the local solution operator (Fig. 2c)

$$\widetilde{{\mathcal{G}}}:\{s(x,t-\Delta t),s(x-\Delta x,t),s(x-\Delta x,t-\Delta t),a(x,t)\}\mapsto s(x,t).$$

The training dataset \({\mathcal{T}}\) with one data point \(({a}_{{\mathcal{T}}},{s}_{{\mathcal{T}}})\) is shown in Fig. S6a. Since FPI and cLOINN-random have shown better performance compared to LOINN and cLOINN-grid in previous experiments, we only present the results of FPI and cLOINN-random here (Fig. S6b). FPI uses an equispaced mesh of 101 × 101, and cLOINN-random use 1012 random point locations. The errors of FPI and cLOINN-random both achieve less than 1% with σtest = 0.50 (Table 1), with FPI outperforming cLOINN-random slightly. When σtest is increased from 0.50 to 2.00, the L2 relative errors are smaller than 10%. We show a test example for σtest = 0.50 and the prediction using FPI and cLOINN-random approaches in Fig. S6c.

Analyzing the effect of local domain size for the 2D nonlinear Poisson equation

Next, we investigate the effect of the size of the local domains with a 2D nonlinear Poisson equation

$$\nabla ((1+{u}^{2})\nabla u)=10f(x,y),\quad x\in [0,1],y\in [0,1]$$

with zero Dirichlet boundary conditions. We aim to learn the solution operator \({\mathcal{G}}:f\mapsto u\) for a class of f = f0 + Δf with \({f}_{0}(x,y)=x\sin (y)\).

We choose two different local domains, including a simple local domain with 5 nodes (Fig. 2e)

$${\widetilde{{\mathcal{G}}}}_{1}:\{u(x,y-\Delta y),u(x-\Delta x,y),u(x+\Delta x,y),u(x,y+\Delta y),f(x,y)\}\mapsto u(x,y)$$

and a larger domain with 9 nodes (Fig. 2f)

$${\widetilde{{\mathcal{G}}}}_{2}:\{u(x,y-\Delta y),u(x-\Delta x,y),u(x+\Delta x,y),u(x,y \\+\Delta y),u(x-\Delta x,y-\Delta y),u(x-\Delta x,y+\Delta y)\},\\ \{u(x+\Delta x,y-\Delta y),u(x+\Delta x,y+\Delta y),f(x,y)\}\mapsto u(x,y).$$

For this example, the numerical solution is obtained via the finite element method.

The training dataset \({\mathcal{T}}\) with one data point \(({f}_{{\mathcal{T}}},{u}_{{\mathcal{T}}})\) is shown in Fig. 6a. We compare the results of FPI and cLOINN-random using \({\widetilde{{\mathcal{G}}}}_{1}\) and \({\widetilde{{\mathcal{G}}}}_{2}\) (Table. 1). FPI performs well, and the L2 relative errors achieve less than 2% when σtest = 0.05. Compared to FPI, the performance of cLOINN-random is worse but still acceptable when σtest is small, and the errors achieve less than 5% when σtest = 0.05. (Fig. 6b). For both FPI and cLOINN-random, the local solution operator \({\widetilde{{\mathcal{G}}}}_{2}\) outperforms \({\widetilde{{\mathcal{G}}}}_{1}\) for σtest = 0.05, 0.10, and 0.20. An example of the prediction using FPI and cLOINN-random approaches with \({\widetilde{{\mathcal{G}}}}_{1}\) is shown in Fig. 6c.

a The training data includes a random \({f}_{{\mathcal{T}}}\) generated from GRF and the corresponding solution \({u}_{{\mathcal{T}}}\). b The convergence of L2 relative errors of FPI and cLOINN-random for various test cases using \({\widetilde{{\mathcal{G}}}}_{1}\) and \({\widetilde{{\mathcal{G}}}}_{2}\). c Prediction example of different approaches for a test case with σtest = 0.05. d Local domains with 5, 9, 13, 25, 49, and 81 nodes and corresponding solution operators. e L2 relative errors of FPI for σtest = 0.20 for different local domain sizes, compared to the baseline error between u and u0 (black-dashed line). f Wall clock time (second) per epoch for training local solution operators for different domain sizes.

To deepen our understanding, we extend our analysis to four additional different local domains with 13, 25, 49, and 81 nodes, in addition to those with 5 and 9 nodes (Fig. 6d), and conduct experiments for σtest = 0.20 using FPI. Definitions of the local solution operators are detailed in Supplementary Section S8. Increasing the sizes of local domains potentially improves the accuracy of FPI, though improvements tend to plateau for domains larger than 49 nodes (Fig. 6e and Supplementary Table S4). However, the training time for the local solution operator increases with the number of nodes (Fig. 6f and Supplementary Table S4). This suggests that employing a local domain with more nodes can enhance accuracy, whereas a smaller domain may benefit from simpler implementation and reduced training times.

Learning the solution operator for a PDE defined in an irregular domain

Besides the regular domain, we also consider a 2D nonlinear Poisson equation in a square domain with a circle cutout of radius 0.2 (Fig. 7)

$$\nabla ((1+{u}^{2})\nabla u)=100f(x,y)$$

with zero Dirichlet boundary conditions. We learn the solution operator \({\mathcal{G}}:f\mapsto u\) for a class of f = f0 + Δf with \({f}_{0}(x,y)=x\sin (y)\).

a The training data includes a random \({f}_{{\mathcal{T}}}\) generated from GRF and the corresponding solution \({u}_{{\mathcal{T}}}\). b The convergence of L2 relative errors of FPI and cLOINN-random for various test cases. c Prediction example of different approaches for a test case with σtest = 0.05. d, e Test the method in a new geometry by using the local solution operator trained on the previous geometry. d The convergence of L2 relative errors of FPI and cLOINN-random for test cases with σtest = 0.10 for a smaller circle cutout. e Prediction example of different approaches for a test case.

We choose a simple local domain

$$\widetilde{{\mathcal{G}}}:\{u(x,y-\Delta y),u(x-\Delta x,y),u(x+\Delta x,y),u(x,y+\Delta y),f(x,y)\}\mapsto u(x,y)$$

with 5 nodes (Fig. 2e). For this example, the numerical solution is obtained using the finite element method. The domain is discretized using triangular elements, and the maximum size is 0.005.

The training dataset \({\mathcal{T}}\) is shown in Fig. 7a. When σtest = 0.05, the L2 relative errors achieve less than 3%. Also cLOINN-random performs slightly better than FPI while FPI converges faster, as the values of cLOINN-random solution near the circle boundary are more accurate. The comparison between these two is shown in Fig. 7b, and one example is shown in Fig. 7c. We have shown that our approaches can work well in this complex geometry.

Generalizing to a different geometry

To verify the generalization of our method, we now test the same Poisson equation in a square domain with a smaller circle cutout of radius 0.18. We use the same local solution operator \(\widetilde{{\mathcal{G}}}\) trained from the previous geometry with larger circle cutout. When σtest = 0.10, the L2 relative errors achieve less than 5% for FPI and cLOINN-random. The convergences of FPI and cLOINN-random are shown in Fig. 7d, and one prediction example is shown in Fig. 7e. This demonstrate that our method can generalize well on a smaller circle cutcut.

Learning the solution operator for a PDE system

We consider a diffusion-reaction system in porous media (x ∈ [0, 1], t ∈ [0, 1])

$$\frac{\partial {C}_{A}}{\partial t}=D\frac{{\partial }^{2}{C}_{A}}{\partial {x}^{2}}-{k}_{f}{C}_{A}{C}_{B}^{2}+f(x),\quad \frac{\partial {C}_{B}}{\partial t}=D\frac{{\partial }^{2}{C}_{B}}{\partial {x}^{2}}-2{k}_{f}{C}_{A}{C}_{B}^{2}$$

with initial conditions CA(x, 0) = CB(x, 0) = e−20x and zero Dirichlet boundary conditions, where D = 0.01 is the diffusion coefficient, and kf = 0.01 is the reaction rate. The solution operator is \({\mathcal{G}}:f\mapsto ({C}_{A},{C}_{B})\). Here we predict the solutions CA and CB for a new \(f={e}^{-\frac{{(x-0.5)}^{2}}{0.05}}+\Delta f\).

Since there are two outputs for this case, we consider the local solution operator (Fig. 2d)

$$\widetilde{{\mathcal{G}}}:\{{C}_{A}(x,t-\Delta t),{C}_{A}(x-\Delta x,t),{C}_{A}(x+\Delta x,t),{C}_{B}(x,t-\Delta t),{C}_{B} \\ (x-\Delta x,t),{C}_{B}(x+\Delta x,t),f(x,t)\}\mapsto ({C}_{A}(x,t),{C}_{B}(x,t)).$$

We show the training dataset \({\mathcal{T}}\) in Fig. 8a.

a The training data includes a random \({f}_{{\mathcal{T}}}\) generated from GRF and the corresponding solution \({C}_{A,{\mathcal{T}}}\) and \({C}_{B,{\mathcal{T}}}\). b The convergence of L2 relative errors of FPI and cLOINN-random for various test cases of CA and CB. c Prediction example of different approaches for a test case with σtest = 0.3.

In this experiment, FPI works well, and the L2 relative errors achieves less than 1% when σtest = 0.10. FPI performs better than cLOINN-random (Table 1). When σtest = 0.10, 0.30, the accuracy of cLOINN-random is smaller than 10%. It is shown that, in this example, FPI is not only more accurate than cLOINN-random, but also converge faster (Fig. 8b). To better understand why cLOINN performs poorly sometimes, we observe that, compared with higher-error test case, for the case with low prediction error, the training loss decreases more significantly and usually reaches a lower loss value by the end of training (Supplementary Fig. S7). This observation indicates that the neural network-based approach may encounter training difficulties, which leads to poor performance for certain test cases. Given the the similarity between our neural network-based approaches to physics-informed neural networks, the training difficulties may due to optimization difficulties associated with the PDE constraint and the spectral bias42,43. We show examples of CA and CB prediction using FPI and cLOINN-random approaches in Fig. 8c.

Application in spatial infection spread through heterogeneous populations

Problem setup

We present an application of our one-shot learning method to solve the spread of infectious diseases influenced by local spatio-temporal factors. Infectious diseases remain a major public health concern worldwide, particularly in the post-era of COVID-19. Mathematicians and epidemiologists have studied the the dynamics of infectious diseases and the spreading of the virus over the population. The SIR epidemic model44 is widely used to model the spread of infectious diseases. The population is divided into three disjoint classes: susceptible (S), infected (I), and recovered (R), where susceptibles can be infected by those already infected and subsequently recover, and recovered class are immune to the disease but lose immunity over time. Various classes of individuals and their spatial interactions have been studied actively, and PDE models are used to represent different scales and interactions within the population45,46,47. Specifically, we consider the PDE model in47 (x ∈ [0, L], t ∈ [0, T]):

$$\frac{\partial S}{\partial t}=-D(x)\beta S\frac{{\partial }^{2}I}{\partial {x}^{2}}-\beta SI,\quad \frac{\partial I}{\partial t}=D(x)\beta S\frac{{\partial }^{2}I}{\partial {x}^{2}}+\beta SI-\gamma I,$$

where D(x) reflects the spatially variable daily travel rate due to environmental factors, β is the daily infection rate, and γ is the recovery rate of infected individuals. The recovered class R can be computed from S and I based on the population conservation in a closed system. The details of the problem setup is in Supplementary Section S10.

One-shot learning is required for this practical problem

For an infectious disease, collecting comprehensive data is challenging and time-consuming. As the disease spreads, the underlying dynamics are likely to change due to mutations in the pathogen, changes in population behavior, or public health interventions like social distancing and lockdowns. These changes affect the model parameter diffusion coefficient D(x), which captures population movement patterns. Our proposed one-shot learning approach is particularly suitable for this scenario, requiring only a single data pair-for example, current travel patterns reflected in D(x) along with the susceptible S(x, t) and infected I(x, t) populations. A model trained from this data in one setting could be quickly adapted to predict the spread for another D(x). Unlike traditional modeling approaches that generally require large datasets for training, our proposed one-shot learning method enables predictions even with limited data, which can be valuable for effective epidemic response, especially in the early stages.

One-shot learning method setup and results

We then present the setup and results of our one-shot learning method. We use L = 1, T = 10, γ = 0.2 and β = 0.8 to test our method. The initial conditions are \(S(x,0)=1-0.5\cos (4\pi x)\), representing the susceptible population influenced by factors like population density or social behaviors, and \(I(x,0)=0.3{e}^{\frac{-{(x-2/3)}^{2}}{0.045}}\), modeling an initial localized infection (Fig. 9a). The zero Neumann boundary conditions ensure no flux. We aim to learn a solution operator \({\mathcal{G}}:D\mapsto (S,I)\) and predict the solutions S and I for a new \(D=0.001{e}^{\frac{-{(x-0.5)}^{2}}{0.08}}+0.001\Delta D\). We show the training dataset \(({D}_{{\mathcal{T}}},{S}_{{\mathcal{T}}},{I}_{{\mathcal{T}}})\) in Fig. 9b.

a The initial conditions of S and I. b The training data includes a random \({f}_{{\mathcal{T}}}\) generated from GRF and the corresponding solutions \({S}_{{\mathcal{T}}}\) and \({I}_{{\mathcal{T}}}\). c Prediction examples of FPI and cLOINN-random for a test case with σtest = 0.3.

We use the local solution operator \(\widetilde{{\mathcal{G}}}\) defined in Supplementary Section S10, and test the method for new cases with σtest = 0.10, 0.15, 0.20, 0.30. In all cases, FPI works well, and the L2 relative errors achieves below 3% (Table 1). cLOINN-random performs slightly worse than FPI. We show one example of S and I prediction using FPI and cLOINN-random approaches in Fig. 9c.

link